TECHNOLOGIES, BODIES & TERRITORIES

Digital technologies are gradually being integrated into our bodies and territories. Terms such as biometric identification, smart cities, big data, AI, the Internet of Things and VR keep appearing in public discourse as innovative solutions to improve public services and national security. Yet they can also pose a significant threat to citizens' privacy, freedom of expression and freedom of association, among other rights, and leave us all vulnerable to as-yet-unknown kinds of cyberattacks. How do we walk freely in a territory surveilled by both companies and governments? How are all these new technologies changing our relations to our bodies and to the territories we inhabit? Who is mining the data we produce in these relations? Who profits from it? If we consider the territoriality of the production cycle of all the electronics we consume, are we restating colonial relationships disguised as innovation? If we consider the socio-environmental impact of the extractivism and mining of electronic components, of the electricity consumed by the vast number of servers a surveillance capitalism economy requires, and of e-waste disposal, how sustainable can it be to keep scaling up the production of the programmed-obsolescence technologies we consume today? Are smart cities actually meant to be fueled by socio-environmental conflicts, colonialism and waste?

Research: "Facial Recognition in the Public Sector and Transgender identities"

In collaboration with researcher and trans activist Mariah Rafaela, we interviewed transgender activists to understand current challenges and victories in securing their right to identity, and to map new challenges brought by facial recognition technologies when they are deployed by the public sector to authenticate identities. This research was developed with the support of Privacy International and launched in a webinar with the participation of Brazilian computer scientist Nina Da Hora, Nanda Monteiro and transfeminist activist Viviane Vergueiro. An English version of the main findings is also available.

#Facial Recognition #LGBTQIA rights #Surveillance #Racism #Technosolutionism

Webseries: "From Devices to Bodies"

A series of short documentary films portraying conversations with women and gender non-conforming experts reflecting on the privacy implications of technologies that use our bodies as data sources. Launched in 2021 with support from the Heinrich Böll Stiftung in Brazil, the series opens with an episode on DNA data collection; the second video is a debate on the human rights implications of using facial recognition in the public sector to authenticate identities or under narratives of public safety.

#DNA collection #Surveillance #Facial Recognition #Public Security #Technosolutionism

Podcast: “Facial Recognition: Automating Oppression?”

What happens when we use a binary algorithm to control and influence non-binary and very diverse lives and experiences? Who gets excluded? What historical oppressions are being exacerbated and automated? Vanessa Koetz and Bianca Kremer, both Coding Rights fellows on Data and Feminisms, talked to two Brazilian experts: Mariah Rafaela Silva, scholar and activist in transgender rights, and Pablo Nunes, a black scholar and activist specializing in public security and racism. In this podcast, we discuss the risks of implementing this kind of technology without an informed public debate about its potential consequences. We even spoke to an algorithm, Dona Algô (Mrs. Algô, short for algorithm), a fictional character created by content producer Helen Fernandez, known on social media as @Malfeitona. Produced by Coding Rights for the series Privacy Is Global, the episode is available in English and Portuguese.

#Facial Recognition #Racism #LGBTQIA rights #Surveillance #Algorithms #Public Security #Technosolutionism

Article: “Ancestry, Welfare and Massive Identification in DNA data collection — solution or trap?”

An article about the dangers of DNA surveillance, the popularization of DNA testing and the pitfalls behind the ancestry debate promoted by private companies. Currently available in Portuguese, it was written by Mari Tamari and Joana Varon for the From Devices to Bodies project. To present the article, Mari Tamari participated in the panel "Ultra-Sensitive Data: How to Deal?", sponsored by Tilt Uol at The Developer's Conference.

#DNA collection #Surveillance #Public Security #Technosolutionism

Article: Big Tech goes green(washing)

Technologies and tech companies have gradually entered the environmental debate. Inspired by decolonial feminist theories and practices, the article "Big Techs go Green (washing): Feminist lenses to unveil new tools in the Master’s Houses" sheds light on how Big Tech companies have dangerously blended technosolutionist narratives with Green Economy approaches to climate change. The research was developed in an autonomous collaboration between the authors, Camila Nobrega and Joana Varon, and published in GISWatch 2021 by APC. Throughout the year, it was republished in the second issue of Branch Magazine, a publication by Climate Action Tech, and translated into Spanish by Alainet. The article was also featured in the zine The Cost of Convenience, organized by the Minderoo Centre for Technology and Democracy at the University of Cambridge, and the authors produced a short video with some of its highlights. The authors continue to develop the article's provocations through a partnership between Coding Rights and Beyond the Green, an initiative started by Camila Nobrega.

#ClimateChange #Greenwashing #GreenEconomy #Technosolutionism #Socio-EnvironmentalJustice

Research: Facial Recognition in Latin America: trends in the implementation of a perverse technology

Developed in partnership with other organizations in the Al Sur consortium, this research aimed to map in detail the existing facial recognition initiatives in the region, with special emphasis on identifying the companies providing the dominant biometric technologies and their countries of origin, the type of relationship they establish with states, the areas in which their presence predominates, and their potential social, economic and political consequences. Available in English, Portuguese and Spanish.

#Surveillance #Facial Recognition #Technosolutionism #Public Security

HACKING PATRIARCHAL VIOLENCES & ENVISIONING FEMINIST FUTURES

Technologies are usually designed by, and embedded with the subjective values of, those who develop them. The future is therefore likely to replicate many of the inequalities that social justice movements fight against unless we expose the intersectional power imbalances (of race, gender, class, etc.), as well as the geopolitics, behind digital technologies. To redress this scenario, this area aims to map the different expressions of patriarchy in the development and use of digital technologies, to engage in processes of awareness raising and political change, and to promote exercises in speculative futures and experimentation in which technologies can be developed under transfeminist values. Here are some of 2021’s outcomes from our work in this area:

Research: “Why is Artificial Intelligence a feminist issue?”

This is the key question of Not My A.I., an ongoing project that seeks to contribute to the development of a feminist toolkit for questioning the algorithmic decision-making systems being deployed by so-called Digital Welfare States. In partnership, Paz Peña and Joana Varon mapped a series of AI projects being deployed by the public sector in Latin America and then conducted in-depth research to develop a case-based framework for questioning these projects. The research outcomes currently serve as a tool in workshops focused on expanding feminist debates on the topic. The first phase of the project was developed with the support of FIRN (Feminist Internet Research Network) from APC, while the Heinrich Böll Stiftung Brazil enabled us to launch a Portuguese version and kick-start workshops in Brazil, soon to be held elsewhere in Latin America as well. The project also inspired the episode “Is Artificial Intelligence a Feminist Issue?” in the podcast series Privacy Is Global, by Internews.

#Artificial Intelligence #Technosolutionism #Digital Welfare States #Feminist Theories

Platform: the Oracle for Transfeminist Technologies

The Oracle is a card deck used in workshops as a tool for imagining speculative futures and technologies embedded with transfeminist values. The project is an initiative by Coding Rights and Sasha Costanza-Chock, through the Design Justice Network. In 2021, we continued to hold a series of workshops using the Oracle. Among other occasions, we played it in the Mozilla Festival play session “(Re)figuring feminist futures: Alternative Economies”; in the workshop “(Re)Figuring Futures + Transfeminist Oracle: Redux!”, organized by Jac, Joana Varon and Clarote; at the Feminist Future(s) hackathon at MIT; at The New New workshop on Speculative Futures, organized by superrr.net; in the Future of Code Politics track at the Kampnagel festival in Hamburg, which we helped curate; and with Brazilian activists during the Not My AI and Gincana Monstra workshops.

#Design Justice #Codesign #Feminist Futures #algorithmic literacy lab

Platform: Launch of M.A.M.I.

A museum from the future, set in 3021, exhibiting pieces of feminist resistance against different forms of patriarchal violence. The idea for this project emerged in a workshop in Santiago de Chile with a group of feminist artists and activists, with support from Hivos. All the participants have since joined the Matronato, running M.A.M.I. as a collective project. In 2021, it was launched at a webinar with the participation of feminist artists Senoritaugarte, Lucía Egaña, Anamhoo, Maka, Kalogatias, Mama Tati and Joana Varon, who are part of the Matronato. Later in the year, M.A.M.I. was also showcased in the Future of Code Politics, a track we co-curated as part of the Kampnagel International Summer Festival, held in a hybrid format in Hamburg and online.

#Gender Violence #Art #Feminist Futures

Article: “Artificial Intelligence and Consent: a feminist anti-colonial critique”

A peer-reviewed article by Joana Varon and Paz Peña, published in the special issue on Feminist Data Protection of the Internet Policy Review, a publication of the Alexander von Humboldt Institute for Internet and Society.

#Artificial Intelligence #Data Protection #Feminist Theories #Consent

Article: “Consent to our Data Bodies: Lessons from feminist theories to enforce data protection”

The article, written by Joana Varon and Paz Peña, was republished in the book Ciberfeminismos 3.0, a publication of the Research Group on Gender, Digital Technologies and Culture (Gig@/UFBA).

#Data Protection #Feminist Theories #Consent

Article: Beautifying or Whitening?

How do social media filters reinforce and reproduce racist beauty standards? Although common, the use of image editing tools on social media can hide a serious issue: the reinforcement of a racist standard of beauty. In this article, author Erly Guedes raises questions about the relationship between Instagram filters and the reproduction of racism on social media platforms. By continuously devaluing the bodily features of black people, which are treated as marks to be hidden and retouched even digitally, society and a myriad of technological devices, such as social media platforms and their filters, formulate and perpetually reproduce hegemonic models of beauty based on whiteness.

#Racism #Algorithms

DIGITAL SECURITY/CUIDADOS DIGITAIS

With the increase in digitalization, which was particularly accelerated during the COVID-19 pandemic, we have observed an increase in online attacks with severe consequences for the offline activities of human rights activists. In Brazil, this scenario is even more challenging given the rise of tech-savvy far-right groups that use digital environments as battlefields to attack women, LGBTQIA people, black activists, sexual and reproductive rights advocates, land defenders, activists from the favelas and other communities that question the status quo of the white, cis, male, heteronormative dominant capitalist society. This trend, combined with the business model of mainstream social media platforms, which turns hate into profit, results in digital environments prone to gender-based political violence, hate, threats and misinformation. In this area, we focus on tracking emerging manifestations of digital violence and fostering a technopolitical, critical view of the tools we use in our daily activism, so that we can use them more carefully and strategically and gradually shift towards supporting feminist infrastructures.

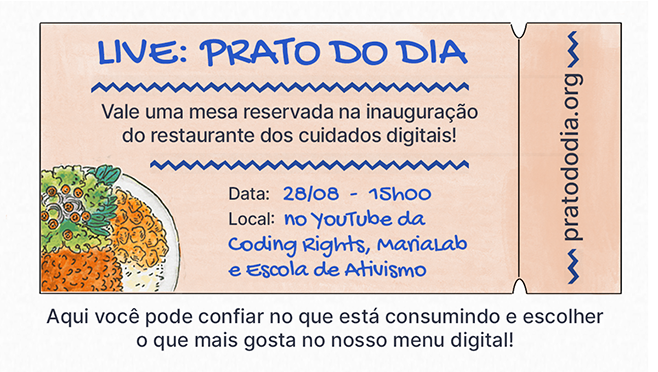

Platform: Prato do Dia

This website brings insights on both digital and food security as critical tools for feminist revolution. It is an initiative of the Network of Transfeminist Digital Security Trainers and was launched in a webinar with the participation of activists Luara Dal Chiavon from MST, Steffania Paola from Cl4ndestina, Natália Lobo from Sempreviva and our fellow Bianca Kremer.

#Food Security #Digital Security

Transfeminist Network of Digital Security Trainers

One of our key activities in cuidados digitais (digital care) is our continued engagement with this inspiring network, which we helped create and which keeps growing stronger. Activities include cooperating on rapid responses to attacks, developing joint project proposals, adapting methodologies and projects to the pandemic context, and debating the governance and sustainability of the network. In 2021, in addition to helping with the concept and communications of Prato do Dia, we facilitated two workshops in Gincana Monstra.

#feminist infrastructure #cybersecurity #cuidadosdigitais #communitybuilding

Observatório de Violências LGBTI+ em Favelas

Through community engagement, the Observatory aims to map and monitor violence targeting LGBTI+ people living in the favelas of Rio de Janeiro. Conducted by Grupo Conexão G with the support of Fundo Brasil and the Universities of Manchester, Coventry, PUC-Rio and Universidade Federal do Rio de Janeiro, it is also developed in partnership with DataLabe, Race and Equality and Coding Rights. Favelas barely figure in the State's calculations when it comes to public policy, since the State operates by maintaining projects of invisibility and killing. Producing data on the quality (and the very possibility) of life of LGBTI+ populations in the favelas is therefore a fundamental tool against political leaders' dismissal of the precarious conditions LGBTI+ people are subjected to.

Podcast: Vigilância, privacidade e segurança digital

In the sixth episode of the Radio Juv podcast (OXFAM Brazil), "Vigilância, privacidade e segurança digital" (Surveillance, privacy and digital security), Joana Varon and Sil Bahia, co-executive director of Olabi, creator of PretaLab and researcher in technology and society, talk about surveillance, privacy and digital security.

Research: "Gender-based political violence on the internet"

In this report, authors Ladyane Souza and Joana Varon provide a regional perspective on gender-based political violence unfolding in digital environments and offer concrete recommendations to the electoral justice system, internet platforms, candidates, parties and civil society advocates. It was written by Coding Rights in collaboration with the Al Sur network, in a project with Fondo Indela, and is available in English, Portuguese and Spanish.

#GenderBasedPoliticalViolence #Elections #Political Violence

EMERGENCY RESPONSES TO PUBLIC POLICIES

Sometimes, shifts in the political context require emergency action to ensure that research outcomes and human rights and feminist approaches to technology can guide advocacy strategies. A draft bill, a public hearing, a consultation, a recently approved bill or a window of opportunity to bring a topic onto the policymaking agenda all demand rapid responses from civil society. And because the internet is global, we nurture national, regional and global coalitions and networks to engage strategically and collectively with policymakers in national, regional and international fora.

Fellowship: Data Protection & Feminisms

Coding Rights' advocacy capacity grew in 2021! Two amazing researchers, Bianca Kremer and Vanessa Koetz, were selected to take part in the project ‘Advocating for Data Accountability, Protection and Transparency’ (ADAPT), a partnership with Internews. Through this project, they join an international coalition of partner organizations from African and Latin American countries and bring feminist analysis to the debates on data protection and privacy.

#DataProtection #DataAccountability #Advocacy

Banning facial recognition in public security

We are actively helping to build a campaign to ban facial recognition in public security through the Digital Rights Network's Coalition. In cooperation with MediaLab/UFRJ, Idec (@idecbr) and CESec, we also helped draft a municipal bill and a state bill proposing to ban the use of facial recognition for public security in the city and state of Rio de Janeiro.

#FacialRecognition #Racism #PublicSecurity

Public hearing on AI at the National Congress

Our fellow Bianca Kremer participated in the public hearing on Artificial Intelligence held by the Science and Technology Committee of the Chamber of Deputies. The debate was about draft bill 21/2020, which defines principles, rights and responsibilities for the use of AI in Brazil.

#ArtificialIntelligence #AI

Criticism towards the changes in WhatsApp Privacy Policies

WhatsApp began notifying users in early January 2021 about changes to its terms of use and privacy policy. To demand a global suspension of these changes, Coding Rights attended a series of meetings with policymakers, WhatsApp and civil society to pressure the company, and helped draft two documents:

a) Statement of Al Sur and Latin American civil society organizations on the new WhatsApp data policy.

b) Statement by the Coalizão Direitos na Rede about the WhatsApp privacy policy update.

#DataProtection

Blog post: National Security Law: Dictatorship’s remaining garbage can be repealed, but criminalization logic persists

In this blog post, our fellow Vanessa Koetz points out the problems of using vague terms to define crimes in the proposed revisions of the National Security Law, which create legal uncertainty capable of criminalizing practices common among civil society advocates.

#Surveillance

Contribution to the regulation on Security Incidents from the National Data Protection Authority

We participated in the expert meeting on security incident notification obligations organized by the National Data Protection Authority (ANPD). Coding Rights was invited to provide input on two questions:

a) What would be the possible exceptions to the obligation to inform the ANPD? and

b) What would be the possible exceptions to the obligation to inform the data subjects?

To answer them, we prepared a Technical Note, in partnership with computer scientist and cybersecurity expert Rafaella Nunes, and sent it to the Authority.

#DataProtection

Critical input to question the implementation of “Cadastro Base do Cidadão”

Coding Rights was invited to a private meeting with the Brazilian Digital Government Secretariat to present the recommendations of our report Cadastro Base do Cidadão: the mega database. The study assessed the implementation stage of this mega database, which was created by a decree that is inconsistent in several respects with the General Data Protection Law (LGPD).

#DataProtection

COVID-19 Observatory

This initiative by Al Sur analyzes and systematizes government measures (including public-private partnerships) related to the implementation of surveillance technologies and data collection in the context of COVID-19, questioning their possible impacts in terms of privacy and surveillance.

#Surveillance #Data Protection #COVID

Disinformation and Intermediary Liability

Al Sur working group on intermediary liability and disinformation.

Radar Legislativo

A database of draft bills proposed in the National Congress related to technology and human rights. It was designed to capture the bigger picture of how digital rights are being addressed in the legislature.

Public Hearing about the installation of facial recognition cameras within the Rioluz Project

Our Data and Feminisms fellows participated in the public hearing at the Municipal Council of Rio de Janeiro.

ABNT Special Commission for Sustainable Cities

Vanessa Koetz takes part in the commission that debates the Brazilian versions of ISO standards related to smart cities and infrastructure.

ONLINE CONFERENCES AND WORKSHOPS IN 2021

The CR team participated in 37 webinars, conferences and workshops.

AWARDS + FELLOWSHIPS IN 2021

Joana Varon, Coding Rights Founder Directress, was nominated by Apolitical as one of the 100 Most Influential People in Gender Policy 2021, in the category Technology and Innovation. Apolitical is a peer-to-peer learning platform for governments, used by public servants and policymakers in more than 170 countries.

Renewed Tech and Human Rights fellowship within the Carr Center for Human Rights Policy at Harvard Kennedy School and affiliation at Berkman Klein Center for Internet and Society at Harvard Law School

MEDIA IN 2021

11,881 followers

8,715 followers

4,632 followers

The CR team was interviewed for, or collaborated on, at least 25 media articles from Brazil and around the world.

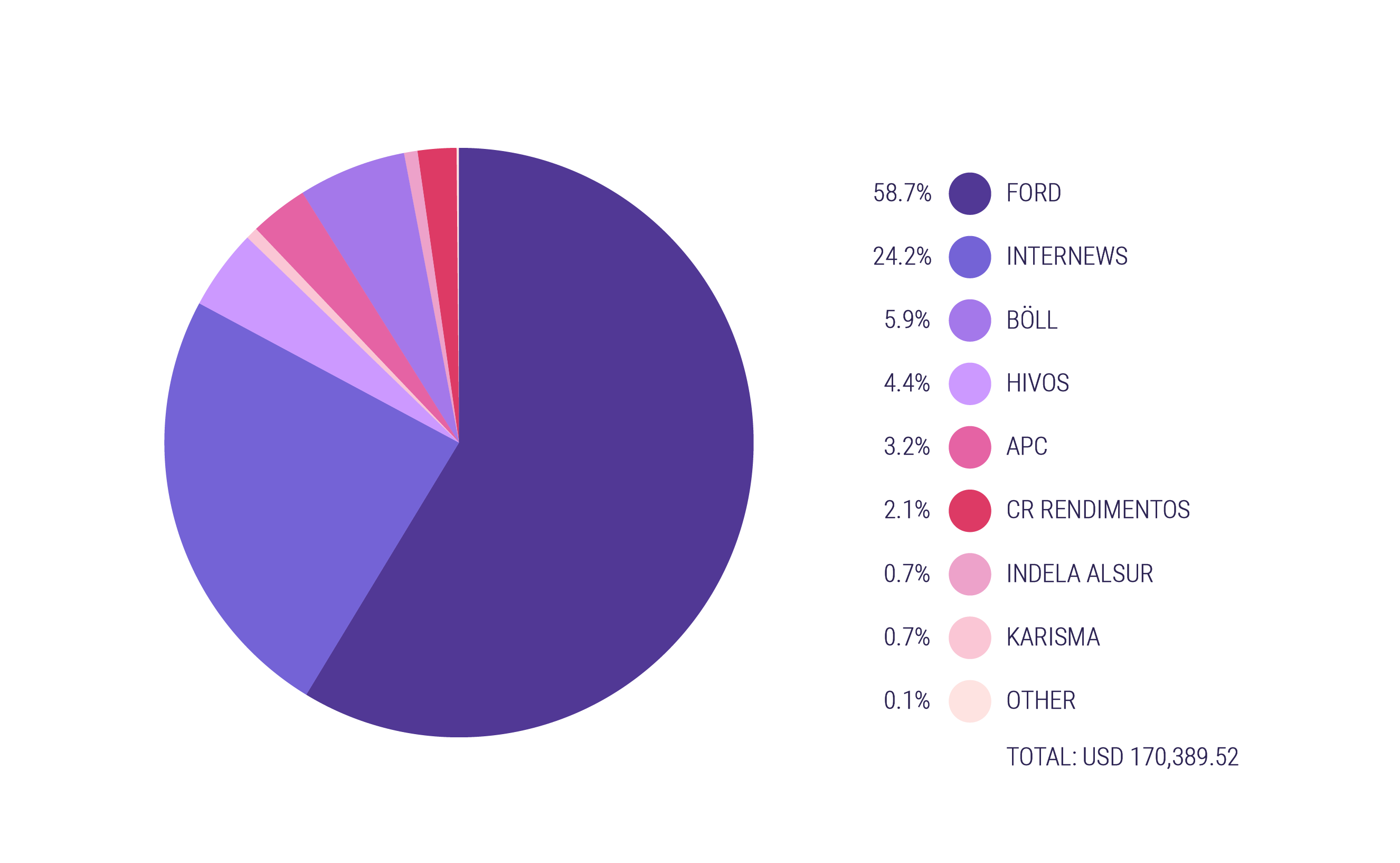

FINANCIAL IN 2021

REGULAR ENGAGEMENT IN THE FOLLOWING NETWORKS IN 2021

Transfeminist Network of Digital Security Trainers

CORE TEAM 2021

JOANA VARON

EXECUTIVE DIRECTRESS

CREATIVE CHAOS CATALYST

JULIANA MASTRASCUSA

COMMUNICATIONS DIRECTRESS AND STRATEGIST

VANESSA KOETZ

GENERAL PROJECTS MANAGEMENT DIRECTRESS

FELLOW IN DATA PROTECTION AND FEMINISMS

BIANCA KREMER

FELLOW IN DATA PROTECTION AND FEMINISMS

NANDA MONTEIRO

SYSADMANA

DIGITAL SECURITY

CLAROTE

ART DIRECTRESS

DESIGNER+ILLUSTRATOR

ERLY GUEDES

COMMUNICATIONS MANAGER

MAX HOLENDER

FINANCIAL MANAGER